A deepfake financial scam uses artificial intelligence to create convincing audio or video imitations of real people. Imagine receiving a video call from what looks and sounds like your company’s chief executive, asking you to authorize a money transfer. While the person appears genuine, the image and voice are computer-generated. The scam’s power lies in how easily trust can be manufactured.

Why Deepfakes Are Hard to Detect

Traditional scams often contained telltale mistakes—spelling errors, poor grammar, or low-quality images. Deepfakes erase many of those flaws. They can replicate facial expressions, speech tones, and even mannerisms. It’s like watching a magician perform a trick where the illusion feels seamless. Because the human brain is wired to trust familiar faces and voices, spotting deception becomes far more difficult.

How Scammers Use Deepfakes in Finance

Fraudsters combine deepfakes with existing scam tactics. In some cases, fake conference calls are staged to convince employees to release funds. In others, audio deepfakes are used in phone scams, imitating family members in distress. The financial world is particularly vulnerable because decisions often hinge on quick recognition of authority. A single convincing command from a “superior” can trigger large-scale losses.

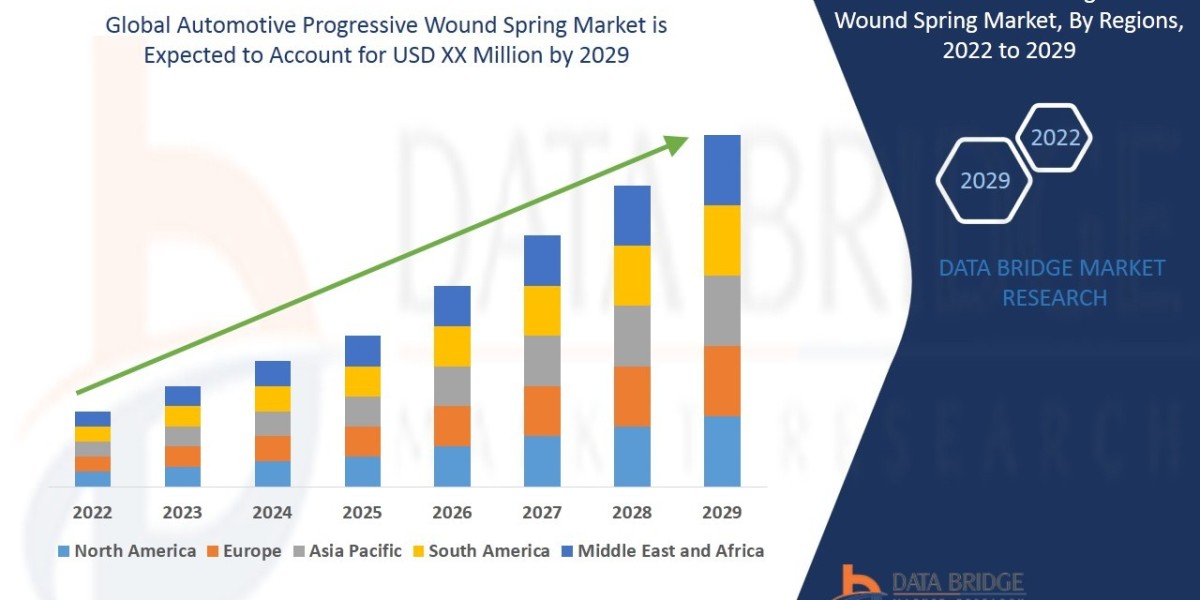

The Role of Data in Fueling Scams

Deepfakes don’t appear out of thin air. They rely on data. Short audio clips from public speeches or video snippets posted online can be enough to train AI models, and the more material available, the more convincing the result. This means that executives, influencers, and even ordinary users who post frequently online provide raw material for potential scams. Limiting unnecessary exposure of personal recordings is therefore a simple form of cybercrime prevention.

Case Studies Highlighting Risks

Several incidents demonstrate the danger. In one reported case, criminals used AI-generated audio to impersonate a company director, leading to a fraudulent transfer of funds. In another, fraudsters used deepfakes to pose as job candidates in recruitment interviews in order to gather sensitive information. While not every story makes headlines, analysts agree these cases represent the early stages of a trend that is likely to grow.

Defensive Measures at the Individual Level

For individuals, defense begins with skepticism. If a message or call requests urgent financial action, pause and verify. Instead of responding directly, contact the person through a secondary channel—such as a known phone number or secure internal chat. Updating passwords, enabling multi-factor authentication, and questioning unusual requests add layers of safety. Much like locking both the front and back doors of a house, multiple defenses reduce overall risk.
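
The "pause and verify" habit can be written down as a simple rule. The sketch below is illustrative only: the `KNOWN_CONTACTS` directory, the `Request` fields, and the channel names are all assumptions made for the example, not part of any real tool. It shows the core idea that an urgent or financial request should be confirmed through a channel other than the one it arrived on, using contact details recorded in advance.

```python
from dataclasses import dataclass

# Hypothetical directory of contact details recorded BEFORE any request arrived.
# Never use a phone number or link supplied in the suspicious message itself.
KNOWN_CONTACTS = {
    "ceo@example.com": {"phone": "+1-555-0100", "chat": "ceo.internal"},
}


@dataclass
class Request:
    claimed_sender: str    # who the caller or message claims to be
    arrival_channel: str   # e.g. "phone", "chat", "video_call", "email"
    is_urgent: bool
    involves_money: bool


def needs_callback(req: Request) -> bool:
    """Urgent or financial requests always get a second-channel check."""
    return req.is_urgent or req.involves_money


def callback_options(req: Request) -> dict:
    """Return known contact channels other than the one the request used."""
    known = KNOWN_CONTACTS.get(req.claimed_sender, {})
    return {ch: addr for ch, addr in known.items() if ch != req.arrival_channel}
```

If `callback_options` comes back empty, there is no trusted way to confirm the request, which is itself a reason not to act on it.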

Organizational Strategies Against Deepfakes

Businesses face higher stakes and therefore require structured defenses. Policies that require dual approval for large transactions can limit damage, because a single deceived employee cannot release funds alone. Training sessions should familiarize employees with the concept of deepfakes so they know such scams exist. Institutions like the National Cyber Security Centre (NCSC) emphasize proactive planning: incident response teams, verification protocols, and awareness campaigns are all essential tools.
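
A dual-approval policy is easy to express in code. The following is a minimal sketch under stated assumptions: the `DUAL_APPROVAL_THRESHOLD` value, the `Transfer` class, and the function names are hypothetical, and a real payment system would add authentication, audit logging, and persistence. It shows the two properties that matter against impersonation: the requester cannot approve their own transfer, and large transfers need two distinct approvers.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; a real policy would set this per organization.
DUAL_APPROVAL_THRESHOLD = 10_000


@dataclass
class Transfer:
    amount: float
    requester: str
    approvers: set = field(default_factory=set)  # distinct people who approved


def approve(transfer: Transfer, approver: str) -> None:
    """Record an approval, rejecting self-approval by the requester."""
    if approver == transfer.requester:
        raise ValueError("requester cannot approve their own transfer")
    # A set ignores repeat approvals from the same person.
    transfer.approvers.add(approver)


def can_release(transfer: Transfer) -> bool:
    """Large transfers need two distinct approvers; smaller ones need one."""
    required = 2 if transfer.amount >= DUAL_APPROVAL_THRESHOLD else 1
    return len(transfer.approvers) >= required
```

Under this rule, a convincing fake call to one employee is not enough on its own: a second person, who did not receive the call, still has to sign off.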

Technology That Fights Back

Just as AI powers deepfakes, AI also helps detect them. Algorithms can analyze tiny inconsistencies in lip movements, background noise, or video compression. However, detection tools are not perfect, and criminals adjust quickly. Analysts recommend treating technological solutions as one part of a larger toolkit rather than a stand-alone fix. Think of it like a smoke alarm—it’s valuable, but it works best in combination with safe habits.

Limits of Current Defenses

Even with awareness and detection systems, no defense is flawless. Overconfidence in one tool or policy can create blind spots. Fraudsters thrive on surprise, shifting tactics whenever a new safeguard gains traction. The challenge is to avoid assuming that today’s defenses will hold tomorrow. Continuous adaptation is the only reliable principle.

Looking Ahead: Preparing for What’s Next

Deepfake financial scams illustrate a broader shift in cybercrime—the blending of psychological tricks with cutting-edge technology. Future scams may become indistinguishable from legitimate communications. But by layering defenses—education, policy, technology, and community reporting—individuals and institutions can reduce vulnerability. The path forward is not fear, but informed caution. If communities share experiences and update habits together, resilience grows stronger than deception.